Open Problems (& Opportunities) for AI: Summary Notes from the Conference

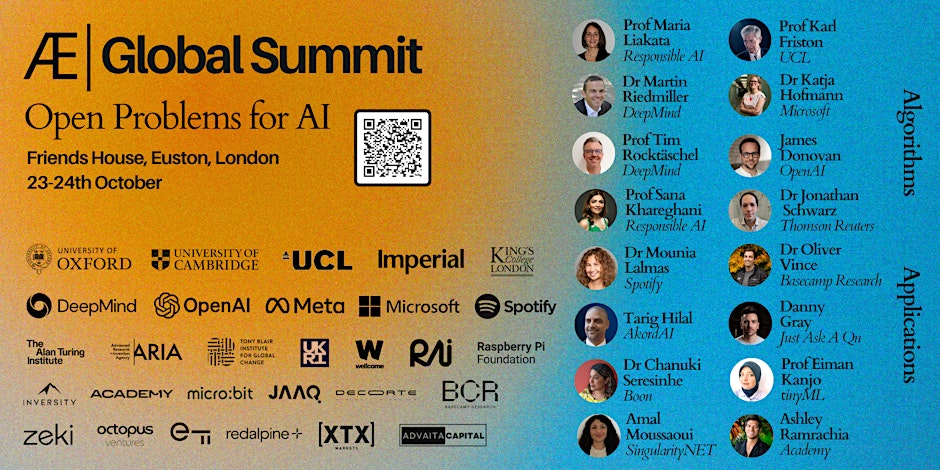

Last week I attended the Algorithmic Innovation & Entrepreneurship Summit on Open Problems for AI. That’s a bit of a mouthful, but in essence it was a two-day conference covering the challenges and opportunities for AI over the next five years.

Day 1 was focussed on algorithms and AI techniques, while Day 2 focussed on applications.

In the post below I’ll share some of the most interesting takeaways that stuck with me. You can also find summaries of all the talks here.

I found most of the content accessible (thanks to my 100 days of AI learning). However, some ideas, such as Markov blankets and probabilistic programming, were so foreign that I had to revisit them after the event.

Still, you’ll find that the highlights below don’t need a technical foundation to appreciate.

Fun Facts

💧 A 100-word email generated by an AI chatbot like ChatGPT requires about 500 ml of water to cool the data centers that produce it. If 10% of working Americans used such a tool to write one email a week for a year, that would take roughly 435 million liters of water.

🐴 The best way to train to be a prompt engineer might be to meditate more. Turns out, having an introspective awareness of your own thought processes could be key to unlocking better LLM outputs.

🤖 That said, you don’t actually need technical skills to use AI well. Three-quarters of non-tech companies have used ChatGPT at least once, even though the technology is less than two years old.

Conference Highlights

The holy grail of artificial general intelligence (an AI that can do anything a human can) will require open-ended learning. This means we need to find a way to have AIs set their own objectives in the real world, and learn openly and endlessly, just as humans do.

Training AI is currently very inefficient. For example, LLMs are trained on trillions of tokens (“the whole internet”, as one speaker put it), which is wasteful because much of that data is low-quality or redundant. Smarter data-selection techniques are emerging that reportedly achieve better results with 50-90% less data.
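The speakers didn’t spell out their exact methods, so the sketch below only illustrates the general idea with two of the simplest curation steps: dropping exact duplicates (after normalizing case and whitespace) and filtering out text that looks low-quality. The heuristic and its thresholds (`min_words`, `min_alpha_ratio`) are my own hypothetical choices; real pipelines typically use far more sophisticated approaches, such as near-duplicate detection or learned quality scores.

```python
import hashlib
import re


def normalize(text: str) -> str:
    """Lowercase and collapse whitespace so trivially different copies hash the same."""
    return re.sub(r"\s+", " ", text.strip().lower())


def looks_low_quality(text: str, min_words: int = 5, min_alpha_ratio: float = 0.6) -> bool:
    """Crude, hypothetical heuristic: flag very short texts or ones dominated by non-letters.

    The thresholds are illustrative assumptions, not values from the conference.
    """
    if len(text.split()) < min_words:
        return True
    non_space = [c for c in text if not c.isspace()]
    if not non_space:
        return True
    alpha = sum(c.isalpha() for c in non_space)
    return alpha / len(non_space) < min_alpha_ratio


def curate(docs: list[str]) -> list[str]:
    """Keep the first copy of each normalized document that passes the quality check."""
    seen: set[str] = set()
    kept: list[str] = []
    for doc in docs:
        key = hashlib.sha256(normalize(doc).encode("utf-8")).hexdigest()
        if key in seen or looks_low_quality(doc):
            continue
        seen.add(key)
        kept.append(doc)
    return kept


if __name__ == "__main__":
    corpus = [
        "The cat sat on the mat and looked out of the window.",
        "the cat sat on the mat   and looked out of the window.",  # duplicate once normalized
        "Buy now!!! $$$ 100% ### !!!",                              # mostly symbols
        "Too short.",                                               # below min_words
        "Photosynthesis converts light energy into chemical energy in plants.",
    ]
    kept = curate(corpus)
    print(f"kept {len(kept)} of {len(corpus)} documents")
    for doc in kept:
        print("-", doc)
```

On this toy corpus only two of the five documents survive, which gives a feel for how quickly redundant and noisy text can be trimmed, even with very blunt tools.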

Using GenAI is also expensive in environmental terms. One speaker noted that a single 100-word AI-generated email requires about 500 ml of water to cool data centers. If 10% of the US working population used such a tool once a week, that would add up to roughly 435 million liters of water a year.
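To make the scaling explicit, here is a minimal back-of-envelope sketch. The 500 ml per email and the 10% adoption share come from the talk; the size of the US workforce (taken here as roughly 167 million people) and one email per user per week for 52 weeks are my own illustrative assumptions.

```python
# Back-of-envelope estimate of the annual cooling water used by AI-written emails.
# Assumptions (not from the talk): ~167 million working Americans,
# one 100-word email per user per week, 52 weeks per year.

LITERS_PER_EMAIL = 0.5           # 500 ml per 100-word email, as quoted at the conference
WORKING_AMERICANS = 167_000_000  # assumed approximate size of the US workforce
ADOPTION_SHARE = 0.10            # 10% of workers use the tool
EMAILS_PER_WEEK = 1              # assumed
WEEKS_PER_YEAR = 52


def annual_water_liters(workers: int, share: float, liters_per_email: float,
                        emails_per_week: int = EMAILS_PER_WEEK,
                        weeks: int = WEEKS_PER_YEAR) -> float:
    """Total water per year = number of users x emails per year x liters per email."""
    users = workers * share
    emails_per_year = users * emails_per_week * weeks
    return emails_per_year * liters_per_email


if __name__ == "__main__":
    total = annual_water_liters(WORKING_AMERICANS, ADOPTION_SHARE, LITERS_PER_EMAIL)
    print(f"{total / 1e6:,.0f} million liters per year")
```

Under these assumptions the total comes to about 434 million liters per year, so the figure is on the order of hundreds of millions of liters. Changing the assumed workforce size or emails per week shifts the result proportionally.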

We must stay aware of the key weaknesses of LLMs: bias, privacy risks and data leaks, and limited or no explainability of how they arrive at their outputs.

There are also limits to techniques like reinforcement learning. For instance, standard setups often assume reliable feedback signals, full access to the state of the system, and clean, well-defined environments, none of which reflect the real world. We need to explore how to train AI systems in more realistic, messier environments.

The ability of LLMs to reason is a promising path. For example, OpenAI’s recent o1 models have been trained to work through problems step by step before answering, rather than simply generating text. OpenAI expects these models to help advance scientific research.

Despite the AI hype, adoption and productivity improvements have yet to take off. Lots of people and organizations have experimented with ChatGPT, but transformational change has yet to materialize. I think software engineers are seeing the most benefit so far, thanks to coding co-pilots. Text summarisation and structuring unstructured data are also useful, but more radical applications, such as AI agents doing human work, have yet to create meaningful value.

AI in education hasn’t seen radical adoption yet either. The technology could offer adaptive, personalised learning that transforms educational outcomes, for example text-to-speech systems that help students with dyslexia, or learning pathways tailored to each student’s ability. There’s lots of potential here, but adoption has been slow.

AI in medicine is also facing adoption challenges. The technology exists, but deploying it is hard. Obstacles include implementation hurdles (e.g. in the NHS), incentive structures that prioritise treatment over prevention, and challenging patient behaviours (e.g. not adhering to the use of monitoring devices).

Future progress in AI will require moving beyond massive data and computing power alone. The field is shifting toward smarter use of data through prioritization, noise filtering, and synthetic data generation. The conference also covered sample-efficient algorithms, open-ended systems that learn on their own, multimodal AI that integrates language, vision, and action in the real world, and multi-agent systems. Hopefully, these developments will be guided by evaluation frameworks that focus on what the world actually needs.